Object Recognition & Machine Learning: Why Humans Still Trump Even the Smartest Algorithms

By Jason Solack, Senior Director, Data Analytics, Gongos, Inc.

Ask the question: “Can machines think for us?” And the answer, of course, is yes. Ask it another way: “Can machines do what humans can do?” And, the answer becomes filled with caveats and conjecture.

Recognized as a scientific discipline since 1959, machine learning explores the construction and study of algorithms that can learn from data. In today's ever-expanding world of big data capture, the pressure is on for data scientists to interrogate data in new and meaningful ways. And so begins the race to see who can create the smartest algorithms known to, and created by, man.

Futurists and Academics Pave a Parallel Path

In 2012, a team of scientists at Google's X Lab created an impressive neural network running on 16,000 processor cores to find out whether machines could be the "data scientists" of the future. Not unlike the human brain, neural networks are a machine learning technique modeled after biological neural networks. Like humans, they are adept at finding patterns in data, with uses ranging from musical patterns and song identification (think Shazam and SoundHound) to image recognition (think Facebook photo tags).

Google created its neural network to identify objects across millions of static images and use natural language processing to describe them in structured sentences. In theory, an algorithm such as this could not only increase productivity across the private and public sectors, but also holds promise of becoming a life-changing technology. At its full potential, it could allow a visually impaired person to feed video imagery into a computer in order to "see" and experience the world around them.

Unaware of Google's efforts, Stanford University had concurrently been working on a strikingly similar project. As that work continues, the Stanford team also acknowledges that while the human brain can describe an immense amount of detail about a visual scene at a mere glance, the same task remains elusive for visual recognition models.

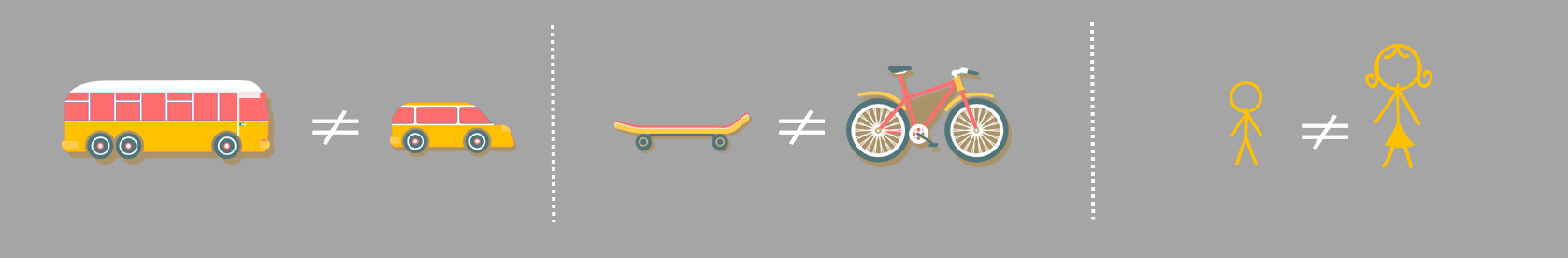

While these neural networks were largely successful, they still misidentified relatively commonplace objects. A yellow automobile was mistaken for a school bus; a man riding a BMX bike was thought to be riding a skateboard; and a mother playing with her child read as two children to the algorithm. Errors such as these would not have occurred with human interpretation, not even among most toddlers! So why do computers have such a hard time deciphering what is so rudimentary to us?

To answer this question, it may be beneficial to think about why we use computers to help us solve problems in the first place.

To Err is Human…and also Machine

Despite our human ingenuity, we accept the fact that our brains have natural limits. Our ability to process massive amounts of granular data is minuscule relative to a computer's, and the speed at which we can humanly process information is sluggish at best compared to today's CPUs. Not to mention, humans must sleep and recharge in order to be effective data gatherers and decision-makers. So it would be fair to say that we created computers to extend our bandwidth of intelligence and compensate for our own humanness.

at which we can humanly process information is sluggish at best when compared to today’s CPUs. Not to mention, humans must sleep and recharge in order to be effective data gatherers and decision-makers. So, it would be fair to say that we created computers to extend our bandwidth of intelligence to compensate for our own humanness.

But consider a human's instinctive ability to recognize a face, or to tell a mommy from a friend, and the reciprocal relationship between humans and machines becomes clear.

Our brains are wired to identify human-centric characteristics with ease, and they have the capacity to consider context, hypothesize, and apply emotional understanding. So when it comes to exploring and analyzing human-centered data, humans will always be part of the learning equation.

Good news for data scientists. And for corporations whose very lifeblood is consumers…and, well, humans.

The Interdependence of Man and Machine

While machine learning algorithms are powerful—and necessary—tools to help us detect subtle patterns and relationships across billions of data points, computing power is intended to complement our minds—not replace our thinking.

And while computers can drill down to find the most intricate facts in data, it will be a long time before they can uncover insights on their own.

Let's face it: computers are commodities. In today's world, tech-forward individuals can deploy a cluster of cloud computers with a few mouse clicks. But finding data scientists who can leverage that computing power to its full potential is a far more challenging proposition.

The enduring human ability to take a step back and simply ask, "Does this data make sense?" is a competency that cannot be commoditized, unlike, yes, even the smartest algorithms.